We often hear organizations ask how they can drive more insights out of their connected devices. Though the Internet of Things (IoT) has been a buzzword for the last few years, many organizations are still struggling through the headache of implementing an IoT pilot or solution. Most of the clients we engage with start with one of the following statements:

- “We want to proactively manage performance instead of looking at things in retrospect.”

- “Where do we start? We have different technology on different machines, different systems driving those machines, and proprietary business processes running those machines.”

- “How can we stream fresh data and preserve it at the same time?”

- “What should we be measuring?”

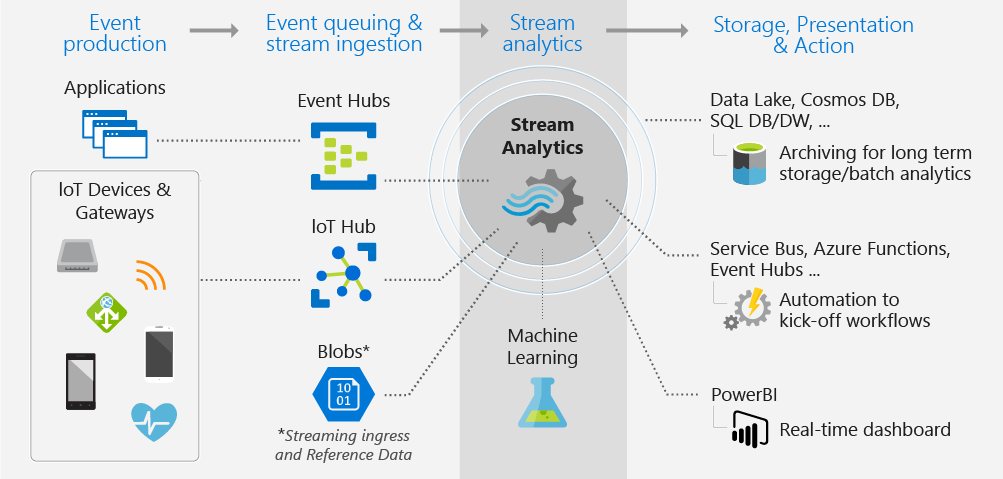

Through these conversations, we developed a framework using multiple Azure components that allows our clients to implement a scalable solution to source, stream, and show their machine performance in real time. The solution uses Azure Functions to source the events, Event Hubs to capture and persist the data, Stream Analytics for anomaly detection, and a Power BI dashboard and report to display the live data.

5 Benefits of Implementing the IoT Framework

- Connect to disparate IoT devices using event-driven and scalable Azure Functions.

- With Event Hubs Capture, organizations can store their streaming data in Azure Data Lake for further analysis and integration with the broader ecosystem.

- Functions within Stream Analytics allow for real-time, machine-learning-driven anomaly detection.

- Stream Analytics jobs for real-time reporting enable end users to consume data with sub-minute latency rather than through an out-of-date batch process.

- Power BI allows you to build reporting customized to your business process while integrating external reference data for key performance indicator analysis.

Plus, the entire solution can be proven out by spending as little as $6 a day.

How Each of the Components Fits Into the IoT Landscape:

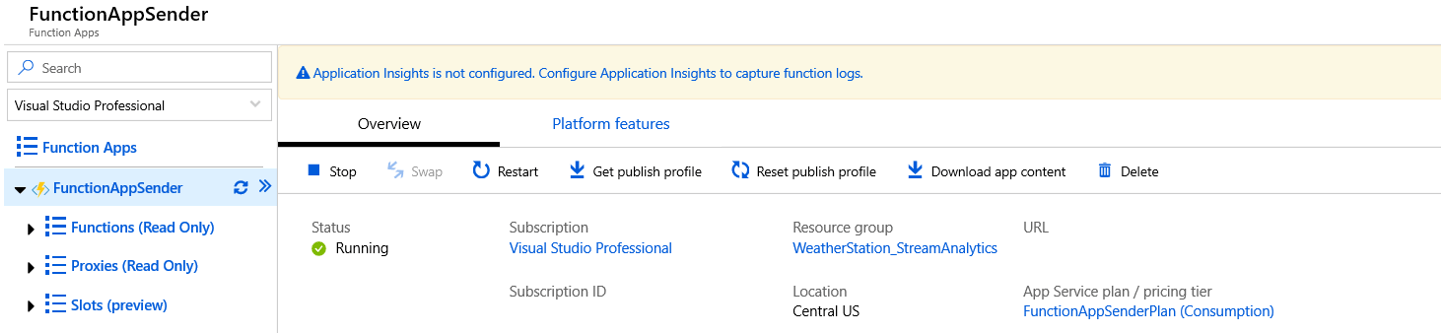

Azure Functions

The function app plays an important role for devices that can’t run IoT Edge: it lets you ingest messages from disparate machines at scale. HTTP triggers in the function let devices send messages into the cloud using standard HTTP communication. The function then passes each message to the Event Hubs queueing service.
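The ingestion step described above can be sketched in plain Python. This is a minimal illustration, not the Azure Functions programming model: the message schema (`device_id`, `metric`, `value`), the `build_event` and `handle_request` names, and the `send` callback standing in for an Event Hubs client are all assumptions made for the example.

```python
import json
from datetime import datetime, timezone

# Hypothetical message schema; real devices will send their own fields.
REQUIRED_FIELDS = ("device_id", "metric", "value")


def build_event(raw_body: bytes, received_at: datetime) -> dict:
    """Validate an HTTP request body and wrap it in an event envelope."""
    message = json.loads(raw_body)
    missing = [f for f in REQUIRED_FIELDS if f not in message]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    return {
        "device_id": str(message["device_id"]),
        "metric": str(message["metric"]),
        "value": float(message["value"]),
        "received_at": received_at.astimezone(timezone.utc).isoformat(),
    }


def handle_request(raw_body: bytes, send) -> int:
    """Return an HTTP status code; `send` stands in for the Event Hubs client."""
    try:
        event = build_event(raw_body, datetime.now(timezone.utc))
    except (ValueError, TypeError):
        return 400  # reject malformed or incomplete messages
    send(json.dumps(event))
    return 202  # accepted for downstream processing
```

In a real function app, the body of `handle_request` would sit inside the HTTP-triggered function, and `send` would be replaced by whatever Event Hubs output the function is configured with.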

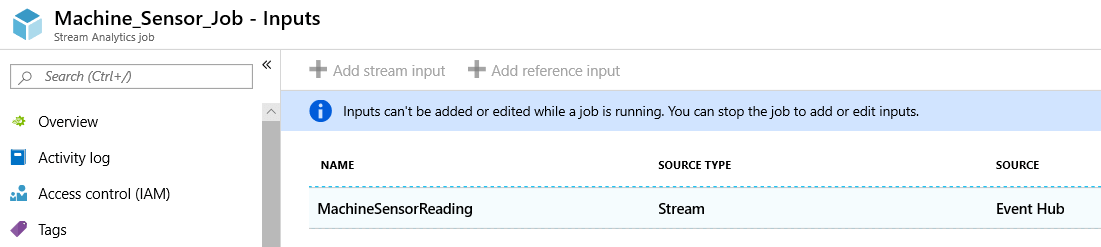

Event Hubs

Paired with Capture, Event Hubs gives clients the ability to process messages at scale while persisting those events to Azure Data Lake. The persisted data can then be integrated into a database or data warehouse through typical batch processing with ETL tools like Data Factory or SSIS. While the data is being captured, the live message stream is concurrently sent to a Stream Analytics job for processing.

Stream Analytics

Microsoft has reduced a lot of the complexity within the job by creating a SQL-like interface for the DML with its own library of supported commands that feel a lot like T-SQL. The job can serve multiple roles depending on how it’s configured.

To me, Stream Analytics Functions feel like scalar functions. They allow real-time machine learning to be applied to your messages for anomaly detection and sentiment analysis using the Cognitive Services APIs or an Azure ML endpoint of your choice. You can also implement business logic in these jobs to control which messages are sent and apply further analysis using reference data sources. Additionally, you can write the output of jobs to multiple locations (the Power BI service, for example).
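To make the idea of scoring each message as it arrives more concrete, here is a minimal sketch of a sliding-window spike detector. It is a simple z-score check swapped in for illustration, not the Stream Analytics function library or an Azure ML model; the `SpikeDetector` class, the window size, and the threshold are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class SpikeDetector:
    """Flag readings that sit far from the recent average (z-score check)."""

    def __init__(self, window_size: int = 20, threshold: float = 3.0):
        self.history = deque(maxlen=window_size)  # most recent readings only
        self.threshold = threshold

    def is_anomaly(self, value: float) -> bool:
        flagged = False
        if len(self.history) >= 5:  # wait for a few readings before scoring
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0:
                flagged = abs(value - mu) / sigma > self.threshold
            else:
                flagged = value != mu  # flat history: any change stands out
        self.history.append(value)
        return flagged
```

Like a scalar function, the detector is called once per message and returns a single value, which a job could then use to decide which messages to forward.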

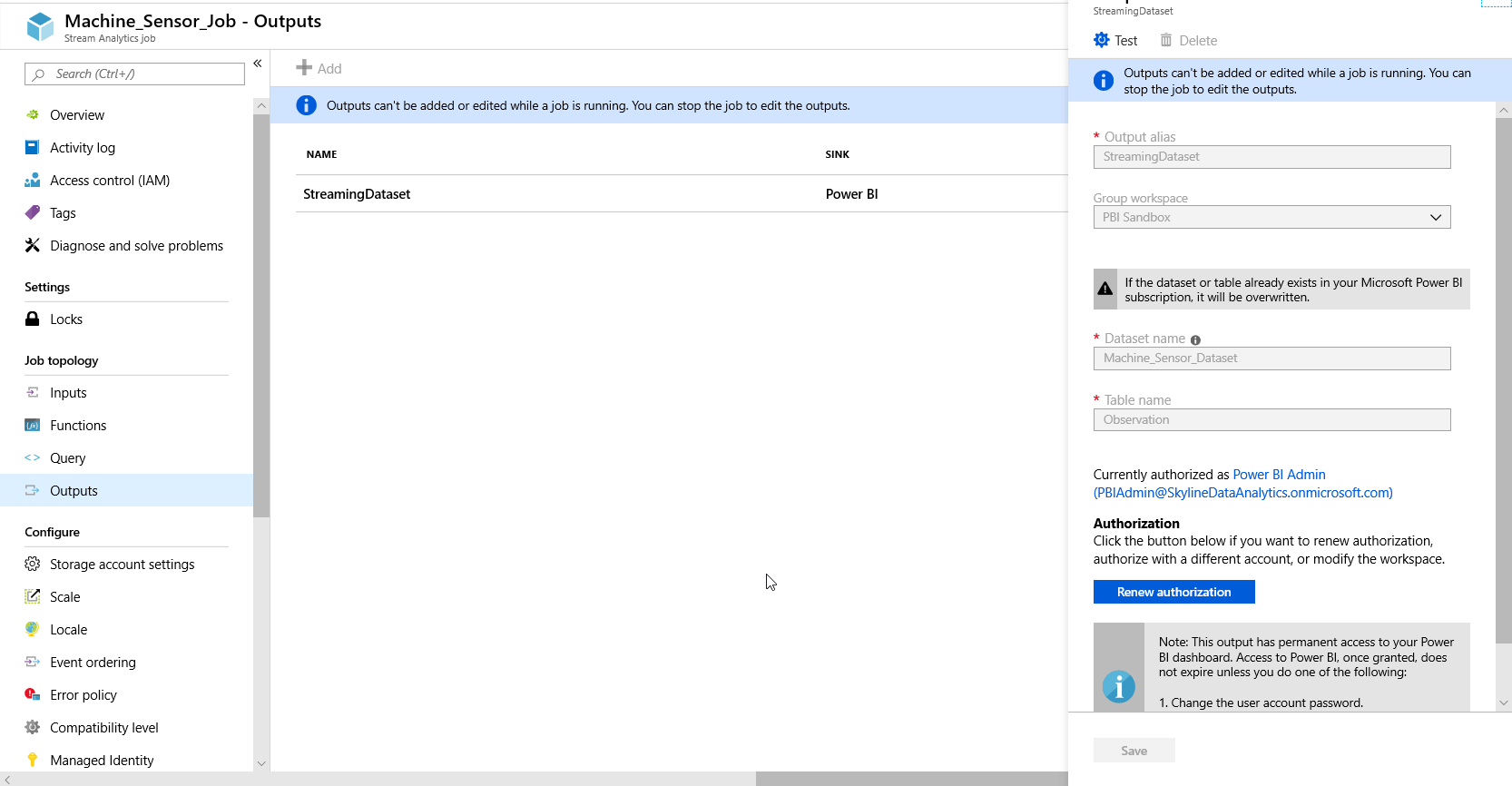

Streaming Live Data to Power BI

The Stream Analytics job sends the messages to a Power BI dataset using the Power BI REST API. Once configured, the dataset will appear in the group workspace with the latest information generated by the Stream Analytics job. The Power BI output automatically sets the dataset to a FIFO retention policy that keeps up to 200,000 rows; once that limit is reached, the oldest rows are dropped as new ones arrive.
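The effect of a FIFO retention policy can be sketched with a bounded queue. This is only an illustration of the behavior, not Power BI's implementation; the `FifoDataset` class is hypothetical, and the example uses a tiny row cap so the eviction is easy to see.

```python
from collections import deque

MAX_ROWS = 200_000  # row cap mentioned above


class FifoDataset:
    """Keep only the newest rows; the oldest are evicted first."""

    def __init__(self, max_rows: int = MAX_ROWS):
        self.rows = deque(maxlen=max_rows)

    def push(self, new_rows) -> int:
        """Append rows and return how many are currently retained."""
        self.rows.extend(new_rows)
        return len(self.rows)


# Usage with a small cap: five rows pushed, only the last three survive.
demo = FifoDataset(max_rows=3)
demo.push({"reading": i} for i in range(5))
```

The practical consequence is that reports built on the streaming dataset only ever see a recent window of data, which is why the captured copy in the data lake matters for history.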

Gotchas:

- Power BI Output for Stream Analytics only allows for 75 fields to be configured.

- All the data types for the Power BI Output need to be defined in the Query.

- It’s best practice to authenticate using a Service Principal from the Stream Analytics Output to Power BI.

- Data in a Power BI streaming dataset can only be removed using the REST API. Don’t try to delete the dataset and republish with the same name (your existing reports will break).

- Currently, Azure Data Lake Gen2 is not an option for Event Hubs Capture, but the rumors that it’s coming are out there.
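The first two gotchas lend themselves to a quick check before a query is deployed. The sketch below assumes you keep a mapping of output field names to their declared types; the `check_output_schema` helper and the set of type names are illustrative assumptions, not part of any Azure SDK.

```python
MAX_FIELDS = 75  # field limit from the first gotcha above

# Assumed, illustrative subset of type names a query might declare.
ALLOWED_TYPES = {"bigint", "float", "nvarchar(max)", "datetime"}


def check_output_schema(schema: dict) -> list:
    """Return a list of problems found in a {field_name: type_name} mapping."""
    problems = []
    if len(schema) > MAX_FIELDS:
        problems.append(f"{len(schema)} fields exceeds the limit of {MAX_FIELDS}")
    for name, type_name in schema.items():
        if type_name.lower() not in ALLOWED_TYPES:
            problems.append(f"field '{name}' has no supported type: {type_name!r}")
    return problems
```

An empty list means the schema passes both checks; anything else is worth fixing before the job starts writing to Power BI.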

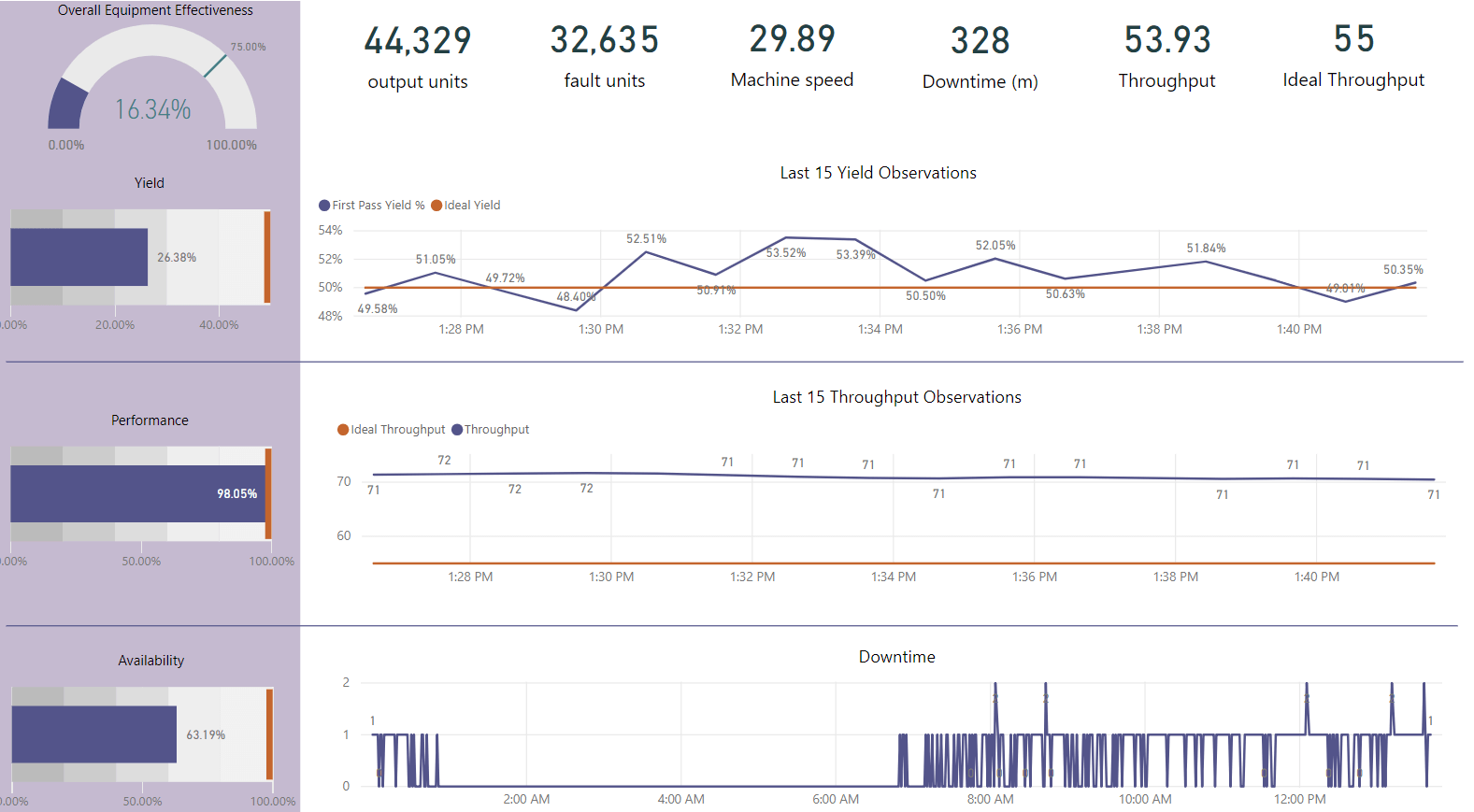

The IoT Solution in Action

Conclusion

Business moves at the speed of communication. Modern cloud architecture empowers decision-makers by giving them real-time access to their most critical information. The value of IoT comes when an organization can move past an initial pilot, and their architecture allows them to scale while accommodating a broad range of use cases. The Azure services in our framework can deliver a repeatable solution at scale, which is important for reducing the learning curve and time to value.
